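Picamera, which was deprecated when the Bullseye release of Raspberry Pi OS came out, has returned in a new form: the Picamera2 library.

This article shows how to use a Raspberry Pi camera module with the Picamera2 library and TensorFlow Lite to perform real-time object detection.

Installing the Picamera2 library

Because Picamera2 is still a preview release, for now it can only be installed by building it from the GitHub sources.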

$ sudo apt update
$ sudo apt install -y libboost-dev
$ sudo apt install -y libgnutls28-dev openssl libtiff5-dev
$ sudo apt install -y qtbase5-dev libqt5core5a libqt5gui5 libqt5widgets5
$ sudo apt install -y meson
$ sudo pip3 install pyyaml ply
$ sudo pip3 install --upgrade meson
$ sudo apt install -y libglib2.0-dev libgstreamer-plugins-base1.0-dev
$ git clone --branch picamera2 https://github.com/raspberrypi/libcamera.git
$ cd libcamera
$ meson build --buildtype=release -Dpipelines=raspberrypi -Dipas=raspberrypi -Dv4l2=true -Dgstreamer=enabled -Dtest=false -Dlc-compliance=disabled -Dcam=disabled -Dqcam=enabled -Ddocumentation=disabled -Dpycamera=enabled
$ ninja -C build
$ sudo ninja -C build install
$ cd ~
$ git clone https://github.com/tomba/kmsxx.git
$ cd kmsxx
$ git submodule update --init
$ sudo apt install -y libfmt-dev libdrm-dev
$ meson build
$ ninja -C build
$ cd ~
$ sudo pip3 install pyopengl
$ sudo apt install python3-pyqt5
$ git clone https://github.com/raspberrypi/picamera2.git
$ sudo pip3 install opencv-python==4.4.0.46
$ sudo apt install -y libatlas-base-dev
$ sudo pip3 install numpy --upgrade
$ cd ~
$ git clone https://github.com/RaspberryPiFoundation/python-v4l2.git

Before anything will run, you also need to set the PYTHONPATH environment variable. For example, add the following line to your .bashrc file:

export PYTHONPATH=/home/pi/picamera2:/home/pi/libcamera/build/src/py:/home/pi/kmsxx/build/py:/home/pi/python-v4l2
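
After reloading the shell, it can be worth confirming that Python really finds the freshly built modules. The following is a minimal sketch, not part of the official setup: the helper name `missing_modules` is made up for illustration, and the two module names are simply the ones the demo program later imports.

```python
import importlib.util


def missing_modules(names):
    """Return the module names that cannot be located on the current import path."""
    return [name for name in names if importlib.util.find_spec(name) is None]


if __name__ == "__main__":
    # Assumption: these are the top-level modules the demo imports from the checkouts.
    needed = ["picamera2", "qt_gl_preview"]
    missing = missing_modules(needed)
    if missing:
        print("Not found on PYTHONPATH:", ", ".join(missing))
    else:
        print("All modules found.")
```

If a name is reported as missing, the usual suspects are a typo in the PYTHONPATH line or a shell that has not re-read .bashrc yet.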

Installing TensorFlow Lite

Since we will only run inference from Python, not training, we can install the lightweight TensorFlow Lite runtime library along with a few other things we need:

$ sudo apt install build-essential
$ sudo apt install git
$ sudo apt install libatlas-base-dev
$ sudo apt install python3-pip
$ pip3 install tflite-runtime
$ pip3 install opencv-python==4.4.0.46
$ pip3 install pillow
$ pip3 install numpy

Getting started with TensorFlow Lite

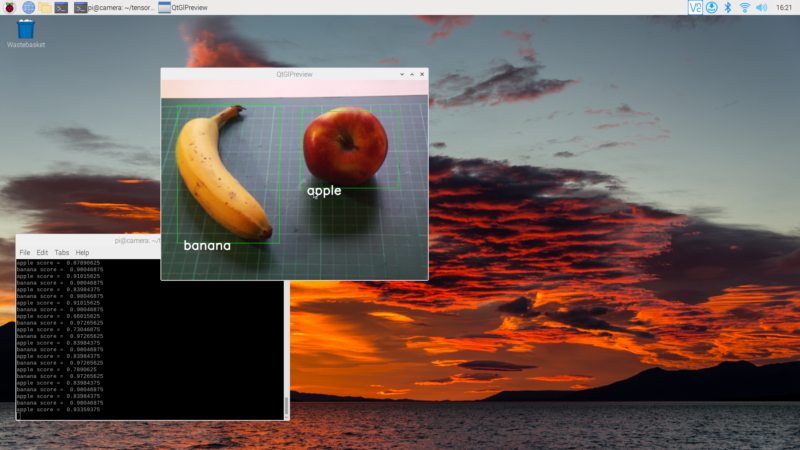

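With everything installed, let's build a demo program that looks for apples and bananas in the camera image.

The code starts the camera and continuously feeds the captured frames into TensorFlow's image buffer. TensorFlow then runs object detection on each frame, and every detected object is marked with a rectangle.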
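
To make the annotation step concrete, here is a small sketch of how a detection box can be turned into rectangle corners. It assumes the model reports boxes as [ymin, xmin, ymax, xmax] normalised to the range 0..1, which is the order the demo unpacks as top, left, bottom, right. The function name `box_to_rectangle` and its parameters are illustrative only and do not appear in the program.

```python
def box_to_rectangle(box, frame_w, frame_h, scale=1, pad=0):
    """Convert a normalised [ymin, xmin, ymax, xmax] box to two pixel corners.

    frame_w and frame_h are the size of the image the model saw; scale maps
    those pixels onto a larger display image, and pad widens the rectangle.
    """
    ymin, xmin, ymax, xmax = box
    start = (int(xmin * frame_w * scale) - pad, int(ymin * frame_h * scale) - pad)
    end = (int(xmax * frame_w * scale) + pad, int(ymax * frame_h * scale) + pad)
    return start, end


if __name__ == "__main__":
    # A box covering the right half and the middle half (vertically) of a 320x240 frame,
    # drawn on a display image twice that size with 5 pixels of padding.
    print(box_to_rectangle((0.25, 0.5, 0.75, 1.0), 320, 240, scale=2, pad=5))
```

The factor of 2 and the 5-pixel padding mirror what the demo does when it draws results from the 320x240 inference stream onto the 640x480 preview stream.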

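# Real-time object detection demo: Picamera2 + TensorFlow Lite
import tflite_runtime.interpreter as tflite
import sys
import os
import argparse
import cv2
import numpy as np
from PIL import Image
from PIL import ImageFont, ImageDraw
from qt_gl_preview import *
from picamera2 import *

normalSize = (640, 480)  # main stream, shown in the preview window
lowresSize = (320, 240)  # low-resolution stream, used for inference

rectangles = []  # latest detections, shared with the drawing callback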

def ReadLabelFile(file_path):
    # Each line of the label file holds a numeric class id followed by its name.
    with open(file_path, 'r') as f:
        lines = f.readlines()
    ret = {}
    for line in lines:
        pair = line.strip().split(maxsplit=1)
        ret[int(pair[0])] = pair[1].strip()
    return ret

def DrawRectangles(request):
    # Called for every completed request; draws directly into the main stream buffer.
    stream = request.picam2.stream_map["main"]
    fb = request.request.buffers[stream]
    with fb.mmap(0) as b:
        im = np.array(b, copy=False, dtype=np.uint8).reshape((normalSize[1], normalSize[0], 4))
        for rect in rectangles:
            print(rect)
            # Boxes are in low-resolution pixels; the main stream is twice as large.
            rect_start = (int(rect[0]*2) - 5, int(rect[1]*2) - 5)
            rect_end = (int(rect[2]*2) + 5, int(rect[3]*2) + 5)
            cv2.rectangle(im, rect_start, rect_end, (0, 255, 0, 0))
            if len(rect) == 5:
                # The fifth element, if present, is the class label.
                text = rect[4]
                font = cv2.FONT_HERSHEY_SIMPLEX
                cv2.putText(im, text, (int(rect[0]*2) + 10, int(rect[1]*2) + 10), font, 1, (255, 255, 255), 2, cv2.LINE_AA)
        del im

def InferenceTensorFlow(image, model, output, label=None):
    global rectangles

    if label:
        labels = ReadLabelFile(label)
    else:
        labels = None

    interpreter = tflite.Interpreter(model_path=model, num_threads=4)
    interpreter.allocate_tensors()

    input_details = interpreter.get_input_details()
    output_details = interpreter.get_output_details()
    height = input_details[0]['shape'][1]
    width = input_details[0]['shape'][2]
    floating_model = False
    if input_details[0]['dtype'] == np.float32:
        floating_model = True

    # The model expects three channels, so expand the grey image to RGB.
    rgb = cv2.cvtColor(image, cv2.COLOR_GRAY2RGB)
    initial_h, initial_w, channels = rgb.shape

    picture = cv2.resize(rgb, (width, height))

    input_data = np.expand_dims(picture, axis=0)
    if floating_model:
        # Float models take inputs scaled to the range [-1, 1].
        input_data = (np.float32(input_data) - 127.5) / 127.5

    interpreter.set_tensor(input_details[0]['index'], input_data)

    interpreter.invoke()

    detected_boxes = interpreter.get_tensor(output_details[0]['index'])
    detected_classes = interpreter.get_tensor(output_details[1]['index'])
    detected_scores = interpreter.get_tensor(output_details[2]['index'])
    num_boxes = interpreter.get_tensor(output_details[3]['index'])

    rectangles = []
    for i in range(int(np.squeeze(num_boxes))):
        # Boxes are normalised to 0..1 in the order top, left, bottom, right.
        top, left, bottom, right = detected_boxes[0][i]
        classId = int(detected_classes[0][i])
        score = detected_scores[0][i]
        if score > 0.5:
            # Image y grows downwards, so the top edge is the smaller y value.
            xmin = left * initial_w
            ymin = top * initial_h
            xmax = right * initial_w
            ymax = bottom * initial_h
            box = [xmin, ymin, xmax, ymax]
            rectangles.append(box)
            if labels:
                print(labels[classId], 'score = ', score)
                rectangles[-1].append(labels[classId])
            else:
                print('score = ', score)

def main():
    parser = argparse.ArgumentParser()
    parser.add_argument('--model', help='Path of the detection model.', required=True)
    parser.add_argument('--label', help='Path of the labels file.')
    parser.add_argument('--output', help='File path of the output image.')
    args = parser.parse_args()

    if args.output:
        output_file = args.output
    else:
        output_file = 'out.jpg'

    if args.label:
        label_file = args.label
    else:
        label_file = None

    picam2 = Picamera2()
    preview = QtGlPreview(picam2)
    config = picam2.preview_configuration(main={"size": normalSize},
                                          lores={"size": lowresSize, "format": "YUV420"})
    picam2.configure(config)

    # Rows of the low-resolution buffer may be padded, so use the stride, not the width.
    stride = picam2.stream_configuration("lores")["stride"]
    picam2.request_callback = DrawRectangles

    picam2.start()

    while True:
        buffer = picam2.capture_buffer("lores")
        # The first stride * height bytes of a YUV420 buffer are the greyscale (Y) plane.
        grey = buffer[:stride*lowresSize[1]].reshape((lowresSize[1], stride))
        InferenceTensorFlow(grey, args.model, output_file, label_file)

if __name__ == '__main__':
    main()
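
The main loop relies on the layout of the low-resolution YUV420 buffer: the luminance (Y) plane comes first and each row occupies `stride` bytes. The sketch below reproduces that slicing with plain NumPy on a made-up buffer so it can be tried without a camera. The names `luma_plane` and `grey_to_rgb` are hypothetical, and unlike the demo, which keeps the full stride width, this version also crops off any row padding.

```python
import numpy as np


def luma_plane(buffer, width, height, stride):
    """Return the Y plane of a planar YUV420 buffer as a (height, width) array."""
    flat = np.asarray(buffer, dtype=np.uint8)
    y = flat[: stride * height].reshape((height, stride))
    return y[:, :width]  # drop the padding bytes at the end of each row


def grey_to_rgb(grey):
    """Replicate a single-channel image into three identical channels."""
    return np.repeat(grey[:, :, np.newaxis], 3, axis=2)


if __name__ == "__main__":
    # Fake 4x2 frame with a stride of 8: 16 bytes of Y, then 4 bytes each of U and V.
    fake = np.arange(24, dtype=np.uint8)
    grey = luma_plane(fake, width=4, height=2, stride=8)
    print(grey)
    print(grey_to_rgb(grey).shape)
```

Working on the Y plane alone avoids a colour conversion on every frame, at the cost of giving the model a greyscale picture.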
